Soft Architecture Machines

Groundbreaking developments, five decades ahead of their time, by The Architecture Machine Group


This post is part of the series, The other kind of A.I.

Architecture Machine Group

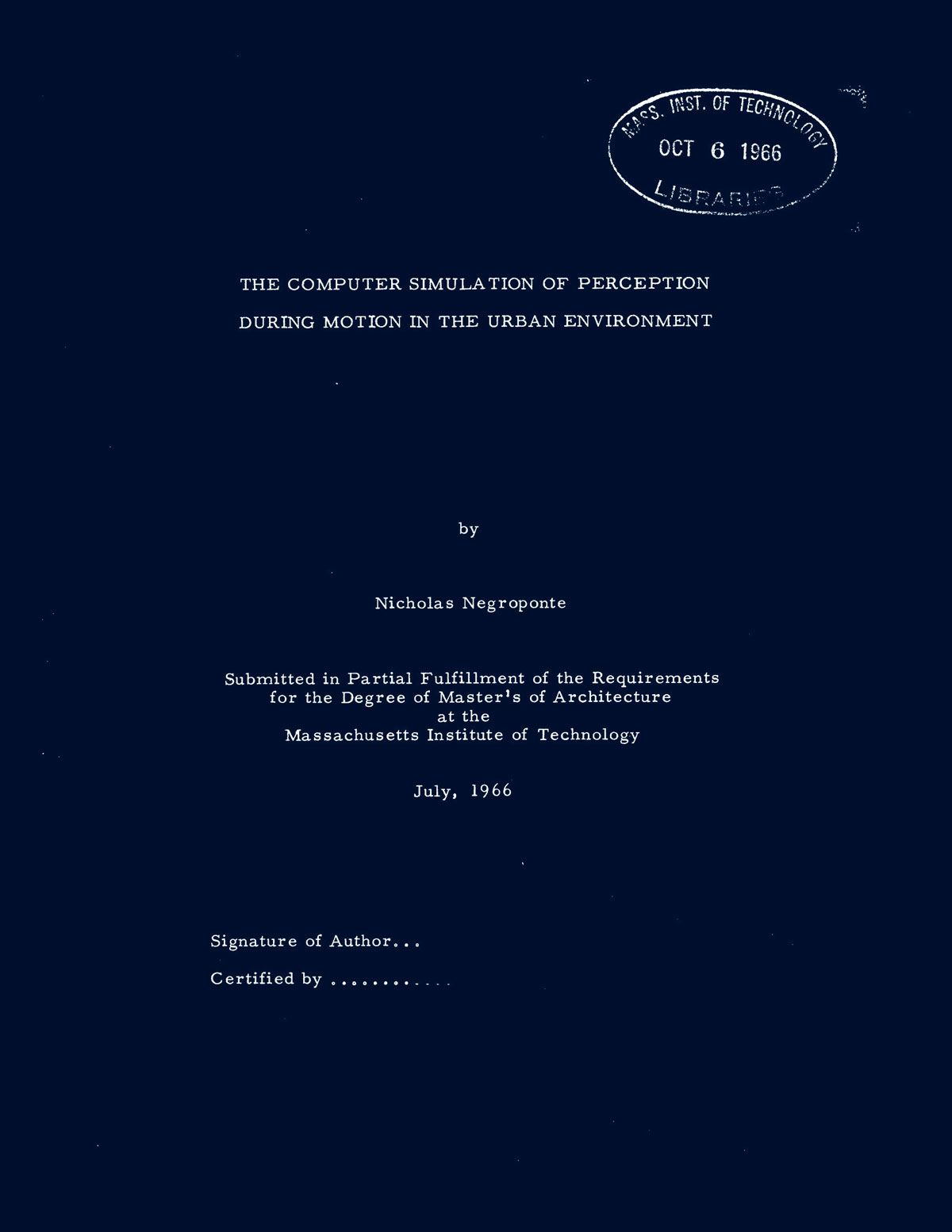

Nicholas Negroponte was trained as an architect, completing his Master's degree in 1966, with a thesis that gave a strong hint about the subject matter of his work to come.

He joined MIT’s faculty that same year, and within a few months founded the Architecture Machine Group. Soft Architecture Machines (1976) is the follow-up to The Architecture Machine, published six years earlier; both books highlight the experiments and outcomes of the group’s work.

Intelligence

Negroponte’s call to adventure came through his stance that “computer-aided architecture without machine intelligence would be injurious because the machine would not understand what it was aiding”.

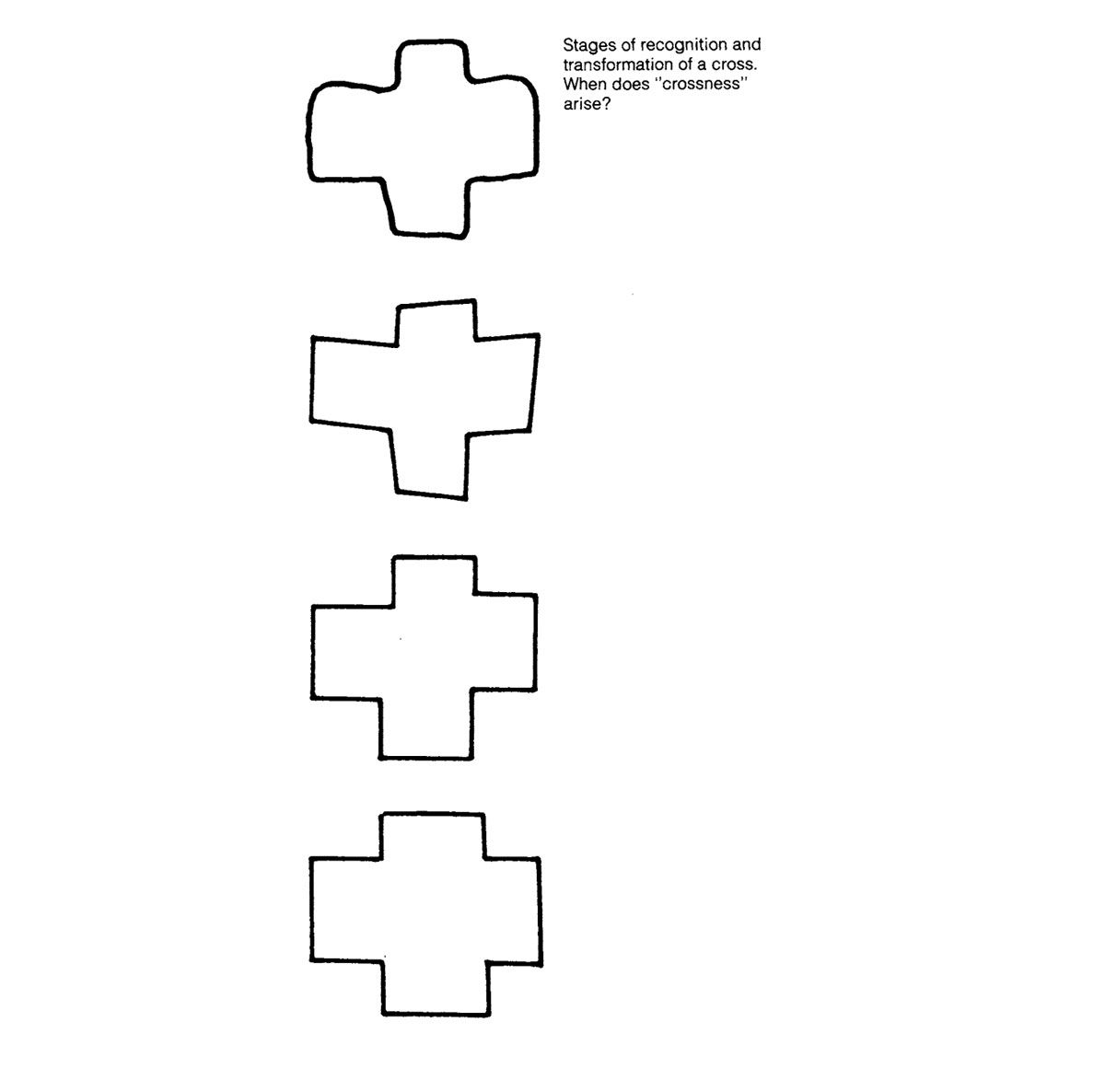

Intelligence, or at least the perception of it, would be born of the machine’s ability to be contextual (to provide feedback relevant to the situation at hand) and to handle what Negroponte called “missing information”.

The latter skill is the more interesting of the two. It is a sort of je ne sais quoi dimension of the design process, one that draws on intangible elements such as wisdom or intuition.

Christopher Alexander’s pattern languages were inspired by, and sought to generate, “a quality that cannot be named” in the built environment. In Alexander’s examples, this soulful, spiritual quality most frequently reveals itself in spaces made by hand, at a 'human' scale.

Negroponte’s journey starts at the other end of the spectrum, with machines.

In his desire to imbue machines with ‘humanity’, Negroponte is drawn away from the edges towards the centre of the spectrum, into the neighbourhood where Alexander also eventually finds himself, as his spiritual qualities intersect with the industrial realities of today.

Both men, ultimately, sought the same thing: a sincere effort to liberate the hidebound practice of architecture from a cloistered elite.

Computer-aided design

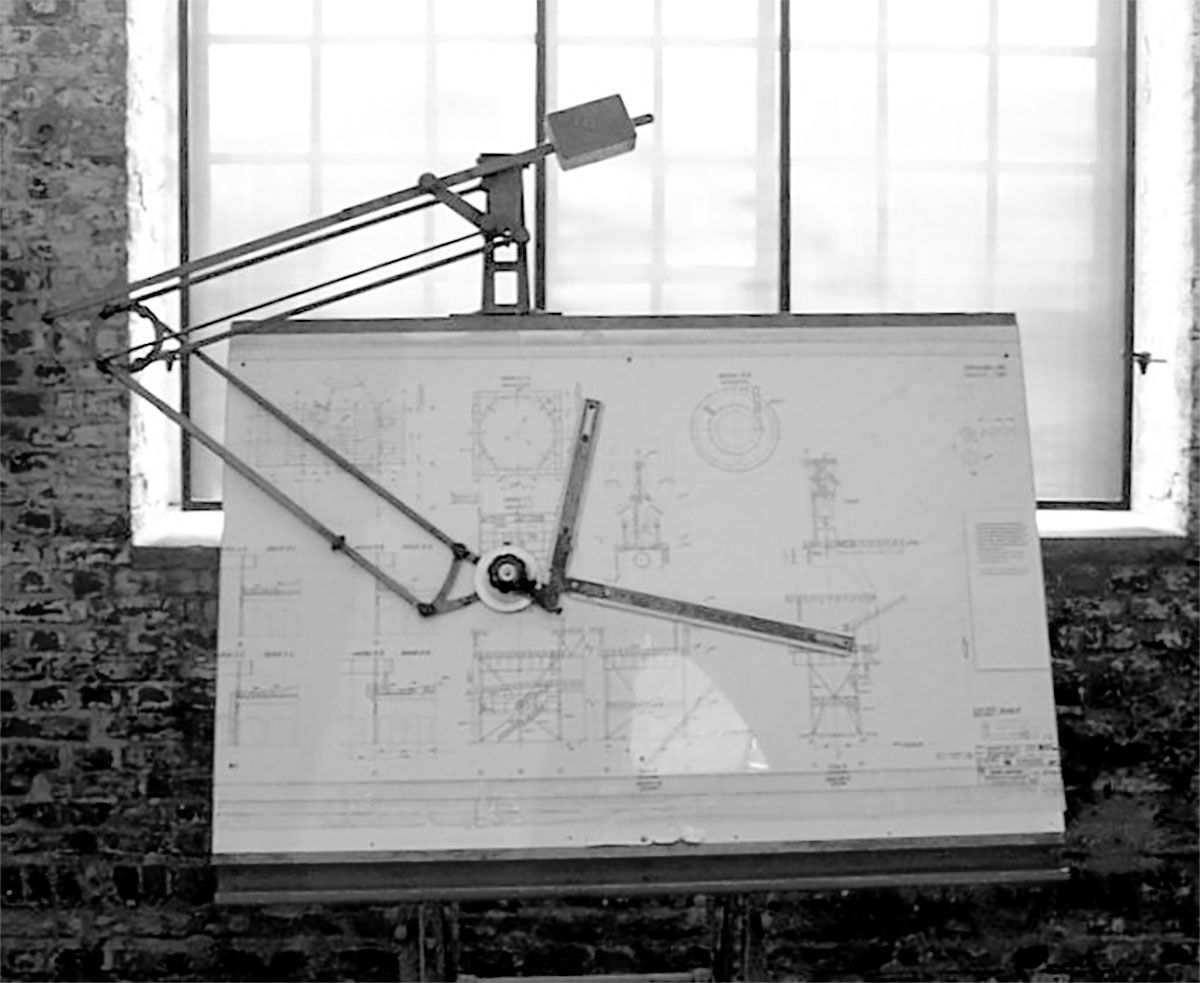

In architecture, computer-aided design (CAD) has evolved considerably since this book appeared in the 1970s. To this day, however, it has not realised the full potential that Negroponte and his colleagues envisioned.

"As far as I’m concerned, machine vision and computer graphics are the same subject, even though they have been so far allocated to totally separate groups of researchers in computer science."

This statement distils Negroponte’s driving theme into a single sentence. It is telling that only now, almost 50 years after publication, are we reigniting this sentiment and catching up with the aspirations of his early developments.

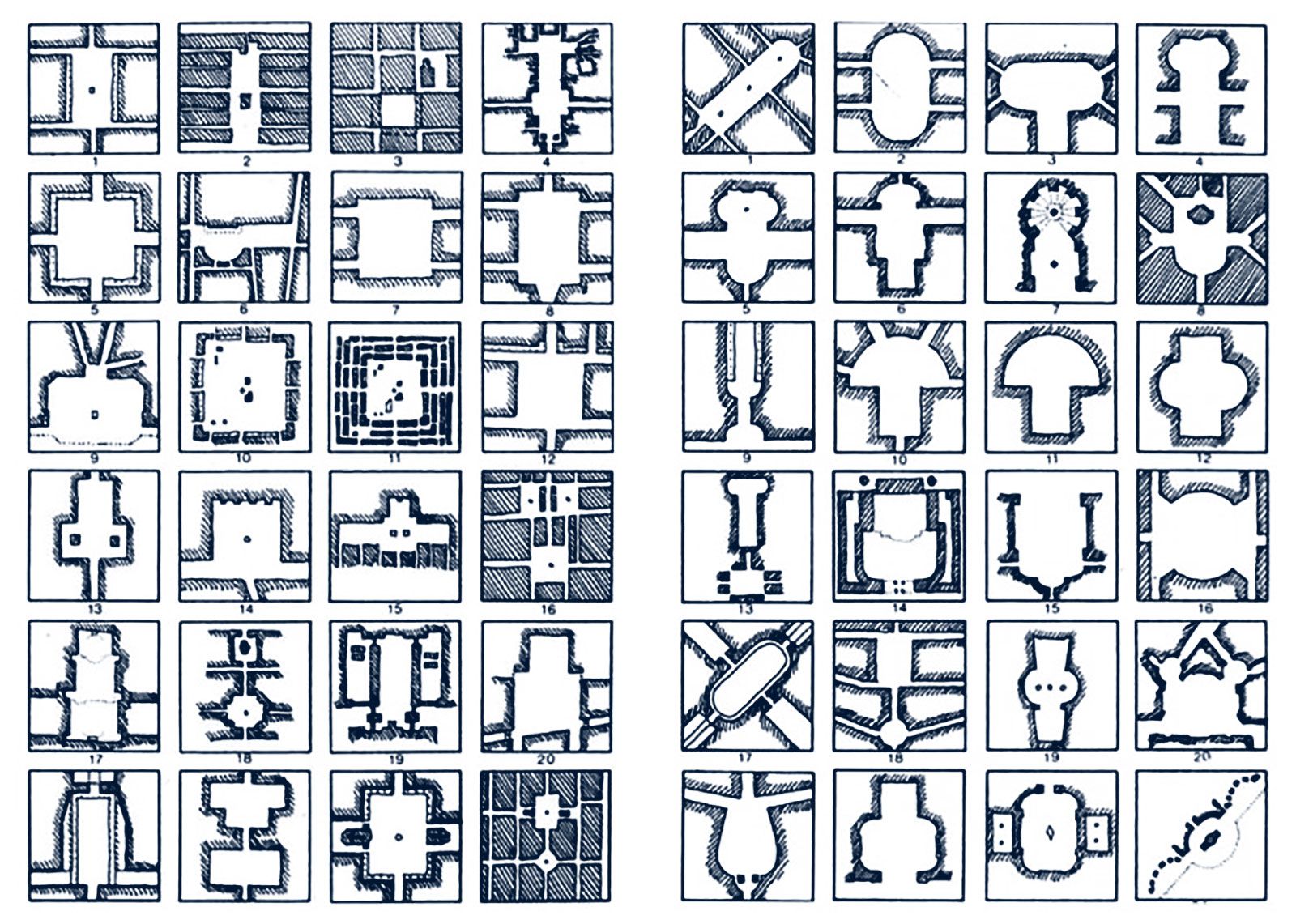

In recent decades we have gone from drawing on paper, to drawing ‘dumb’ lines on screen, to drawing ‘dumb’ three-dimensional forms on screen, and now, finally, to ‘intelligent’ three-dimensional forms. The intelligence contained in these forms is that they recognise what they are. A line is not just a line, nor a cube just a cube; if it is ‘tagged’ as, say, steel, it ‘knows’ that it is steel. It can then be isolated by a structural engineer, with calculations applied to it according to its physical (visual) dimensions. It can even be ‘animated’, in context, as part of a ‘4D’ construction simulation.

'4D' BIM simulation

This is definitely ‘progress’, but what Negroponte envisioned was something else entirely. He saw what we now casually call ‘A.I.’ and computer-aided design as intertwined, so that together they could fully realise the potential of both technologies.

Apart from their separation into different research departments, the two approaches diverged over the years because of the practical limitations of applying one versus the other. "The manoeuvres necessary to get visual information into a machine are more difficult than those required to get it out". In other words, computer vision, then in its infancy, had a much longer trajectory ahead of it than its counterpart, display technology.

3D rendering and visualisation have advanced by leaps and bounds, not only in architecture, of course, but all the way through to the film industry and beyond. The machine vision and spatial intelligence that were meant to accompany them have taken much longer.

De-professionalisation

Both Nicholas Negroponte and Christopher Alexander tried and failed to create an alternative path for the practice of architecture.

In Alexander’s case, it was to have been through the use of patterns, empowering non-professionals not only to produce in the built environment, but also to create living environments in the process. Alexander’s was a very handmade anarchism.

Negroponte pursued a contrasting route, away from the intimacy of the hand, towards the complexity of technology. With Negroponte’s architecture machine, we were to have been liberated from the paternalistic interventions of middle-man architects. The machine, through its intelligence, was to have empowered the average man or woman to create in the built environment: a techno-anarchism.

Incidentally, both men sought to capture qualities apparent in vernacular architecture, that is, building activity that takes place without a professional. Why did they fail? Perhaps because both methods focused on the design process, through the prism of architectural thinking, ignoring the economic underpinning of the construction industry.

So, you have managed to draw a few spaces on your new architecture machine … How do you finance the construction? How is it insured? Who does the actual building? How will you get all of your permits approved?

These mundane questions are actually at the root of why the built environment functions the way it does. Architects, for all of their feelings of self-importance, sit on the periphery, with their core activity of ‘design’. Before they are appointed, the vast majority of critical decisions have already been made, by lawmakers, banks, local authorities and clients. The design element is mostly an embellishment of these foundational decision-making processes. Therefore, any alternatives which focus on the design process alone are bound to fail.

Negroponte rightly points out that ‘user participation’ in design typically manifests in a way that is protective to the professional. However, true de-professionalisation requires addressing the messy, mundane but fundamental issues outside of the design process.

Even though neither Negroponte nor Alexander succeeded in transforming the practice of architecture, they both had an enormous impact on the world of tech, which is more than just a consolation prize.

Gobbledygook

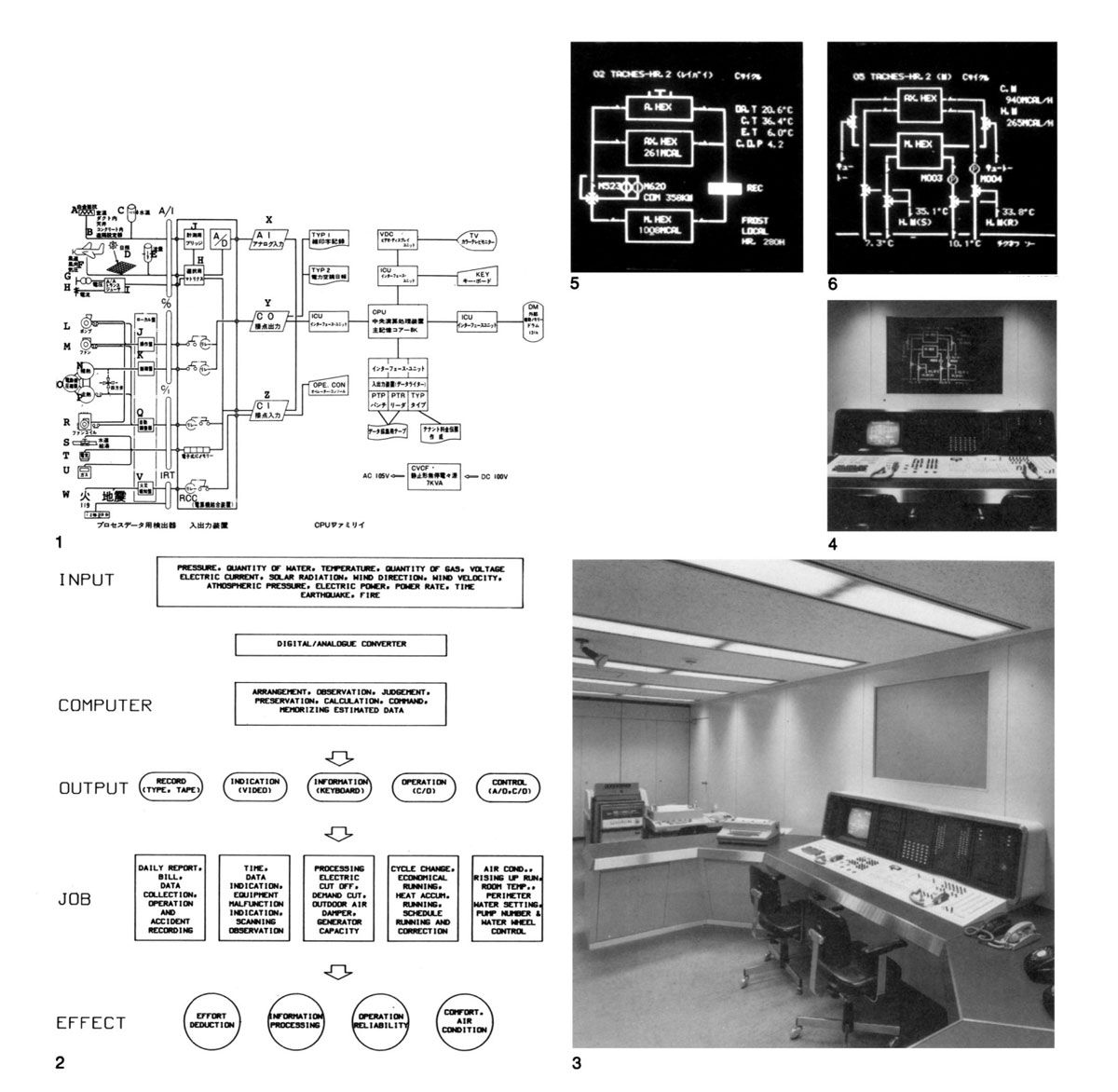

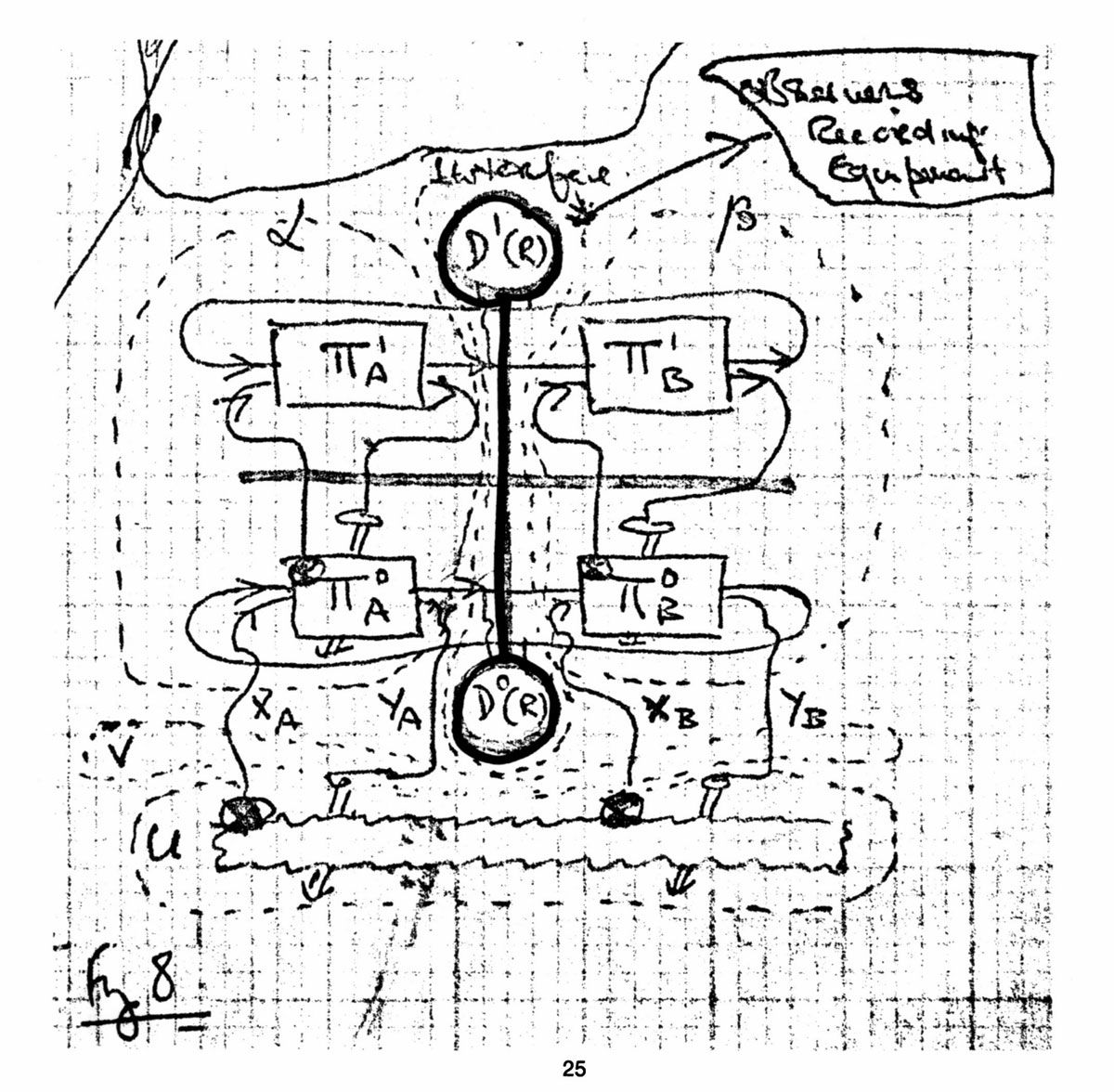

The introduction, a 30-odd-page essay (a mini academic paper) by cybernetician Gordon Pask, is a stark reminder that underneath the visual, three-dimensional and typically intuitive interfaces we now experience with technologies such as augmented reality or artificial intelligence lies a level of discourse that is completely opaque to the everyday user. It is, as Pask acknowledges, a meta-language, and one that requires not only translation but also an in-depth understanding of complex theories.

In fairness, he begins with a warning that he assumes "the reader knows the kind of symbolic operations performed by computer programs and other artefacts, that he will study the matter at leisure, or that he will take these operations for granted".

Taking them for granted it is!

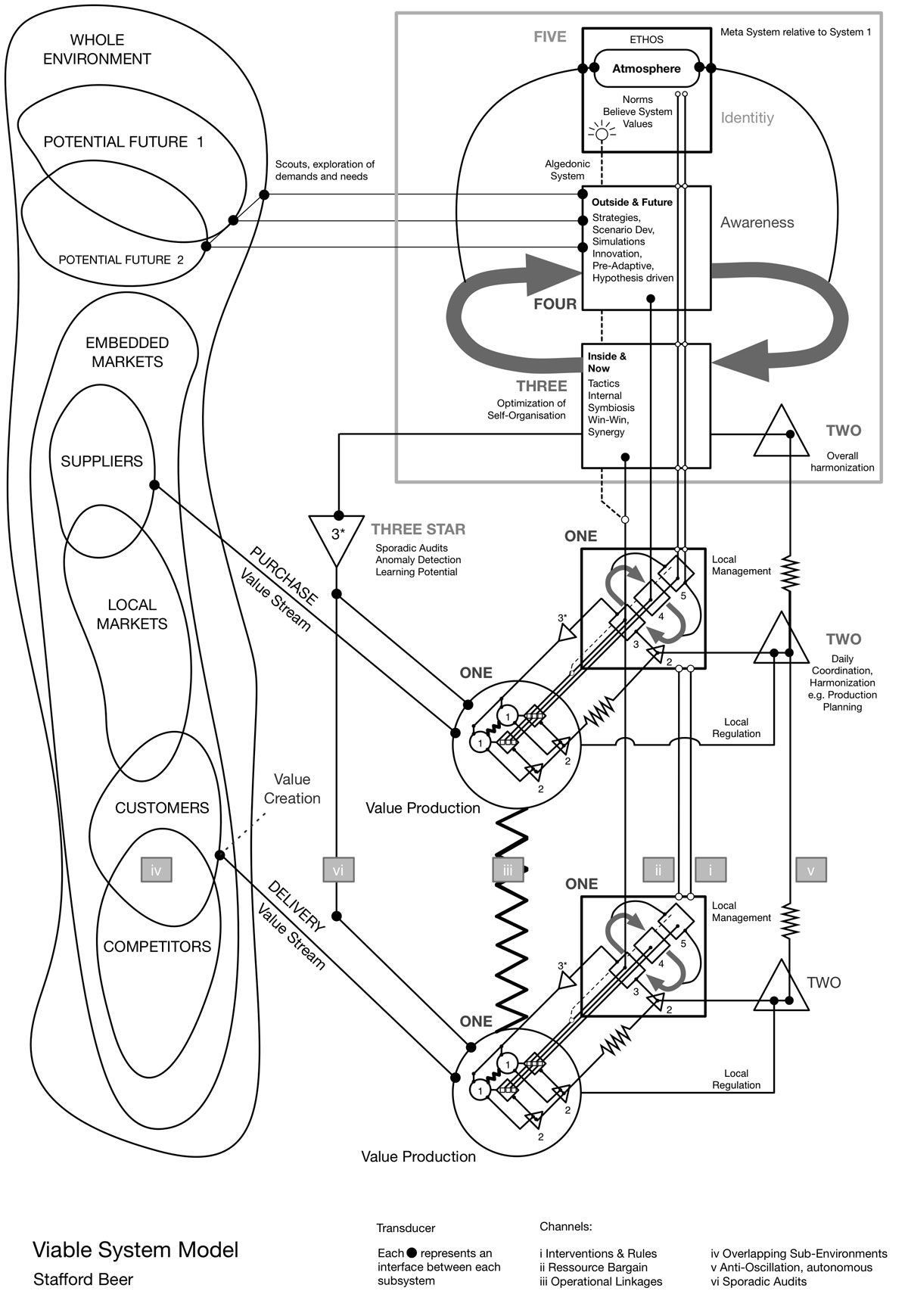

Even without taking the time to digest these ideas comprehensively, we can be sure that the systems described are concerned with communication and control, the key elements of cybernetics.

To be sure, cybernetic diagrams such as these are as inaccessible to me, an architect, as a technical architectural drawing might be to a cybernetician. We each have our own written and visual meta-languages.

However, Pask’s writing style and drawings are out of place here because they are in direct opposition to Negroponte’s mission – democratisation.

Academic vision

There is, unquestionably, a great deal of rigour in the presentation of the thoughts of Negroponte and his colleagues. Their positions in academia demanded it.

We are asked to consider examples such as “a computer’s model of your model of its model of you”; feedback and control systems of responsive/intelligent architecture; “you-sensors”; and the like. These are all fascinating topics, explored broadly and in depth, and presented referentially and reverentially.

Perhaps, however, there comes a point when these exercises become too self-referential (and too self-reverential). History is littered with examples of unscrupulous entrepreneurs raiding the thought libraries of universities and research institutions, and commercialising their ideas. If these ideas were represented by layers of experimentation, the uppermost layers would be visible and accessible, yet completely dependent on the existence and support of the lower, more theoretical layers.

The unscrupulous entrepreneur typically skims from the top, finding practical applications out of the simple need for products to appeal to consumers.

Academia needs some of this hard-nosed energy. However, it is typically stuck in the lower layers of theoretical development, with even its uppermost layers couched in academic meta-language, specifically to appeal to other academics. To be sure, universities and research institutions are not blind to this problem, and some have dedicated business development units. Indeed, through its business-funded model, the MIT Media Lab (successor to the Architecture Machine Group) shares its research with the businesses that fund it in ‘pre-competitive’ layers.

It is a shame, though, reading Soft Architecture Machines, that such a wealth of ideas was explored with so little realised, at least by the proponents of those ideas.

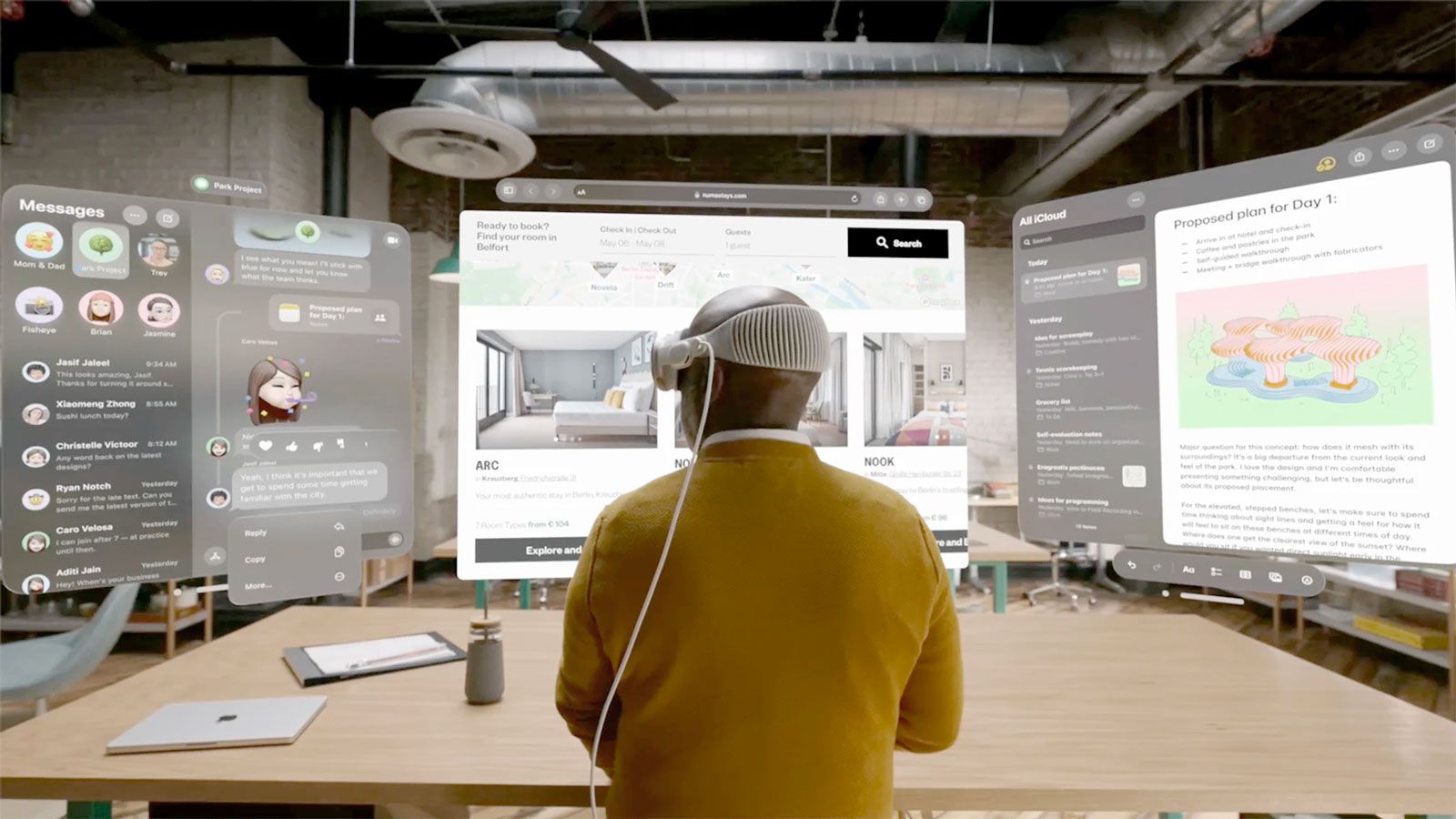

Steve Jobs infamously raided Xerox PARC for its concepts related to the graphical user interface (GUI), which eventually found their way into Apple’s GUI operating systems and brought huge commercial success. The Architecture Machine Group was no less influential. However, its impact has been felt more along the long tail of technological development. Dataland, for example, also influenced Apple’s operating systems of the 1980s, yet remains relevant to the ideas behind Apple’s spatial computing Vision Pro, announced in 2023.

Hits and misses

As we approach the end of the book, the question arises: what does any of this actually have to do with architecture and the core competency of its practitioners, crafting space? Yes, Negroponte was trained as an architect. Therefore, unsurprisingly, the tools and frames that he employs are architectural. Undoubtedly, however, the stars of the show were the computers.

It is curious now, looking back, that explorations in artificial intelligence were so intertwined with architectural thinking. But is this only because they happened to have an architect involved? The teams were highly multidisciplinary, and it could be argued that the cyberneticians or the interface designers mattered as much to the work as anything specifically architectural, if not more. Perhaps architectural thinking, or design thinking, helped to catalyse the whole process. Or perhaps architecture was not relevant at all. Nevertheless, Negroponte asks (but does not sufficiently answer) the most critical spatial question: “Can a machine learn without a body?” This is a hugely significant question now, given the rapid development of both large language models like ChatGPT (dualist in its focus on the mind) and the more holistic, spatial approach of companies such as Apple.

Despite the many innovations that had a clear impact on the digital landscape of the past few decades, there were many moments in the book when one might wonder why we should care. Why was an architect exploring, in such depth, storage tube displays and computer time-sharing, or giving names such as “cyclics” to obscure ideas on responsive materials? Why should I care? Why should anyone care?

On the one hand, Soft Architecture Machines leaves us with the feeling that some ‘classic’ music albums might: a couple of hits that everyone knows, which are the reason most people buy the album, only for them to be disappointed to find that the rest is obscure, dated filler. It is a harsh assessment, but architecture schools are guilty of the same thing, with even fewer 'hits' and much more filler, produced for an academic audience that mostly creates filler itself. Unsurprisingly, architectural academia is frequently accused of being detached, sometimes even by those accused of detachment themselves.

On the other hand, one could argue that the Architecture Machine Group was, and continues to be, one of the most consequential cauldrons of conceptual, technological and spatial thinking that has emerged out of an academic institution. Its successor, the hugely influential MIT Media Lab, is testament to that.

The future of technology is spatial, and it is in the process of revealing itself. It interweaves elements such as machine learning (‘A.I.’), the internet of things, augmented reality and more, with the key social demands of our time. The Architecture Machine Group began laying the groundwork for this revolution five decades ago.

Long live the hits. Let’s limit the misses.

Author: Nicholas Negroponte

Year of Publication: 1976


amonle Journal
